Company Overview August 2023

WHO WE ARE

Applied Digital (NASDAQ: APLD) is a U.S.-based operator of next-generation digital infrastructure, providing cost-competitive solutions to High-Performance Compute (HPC) and Artificial Intelligence (AI).

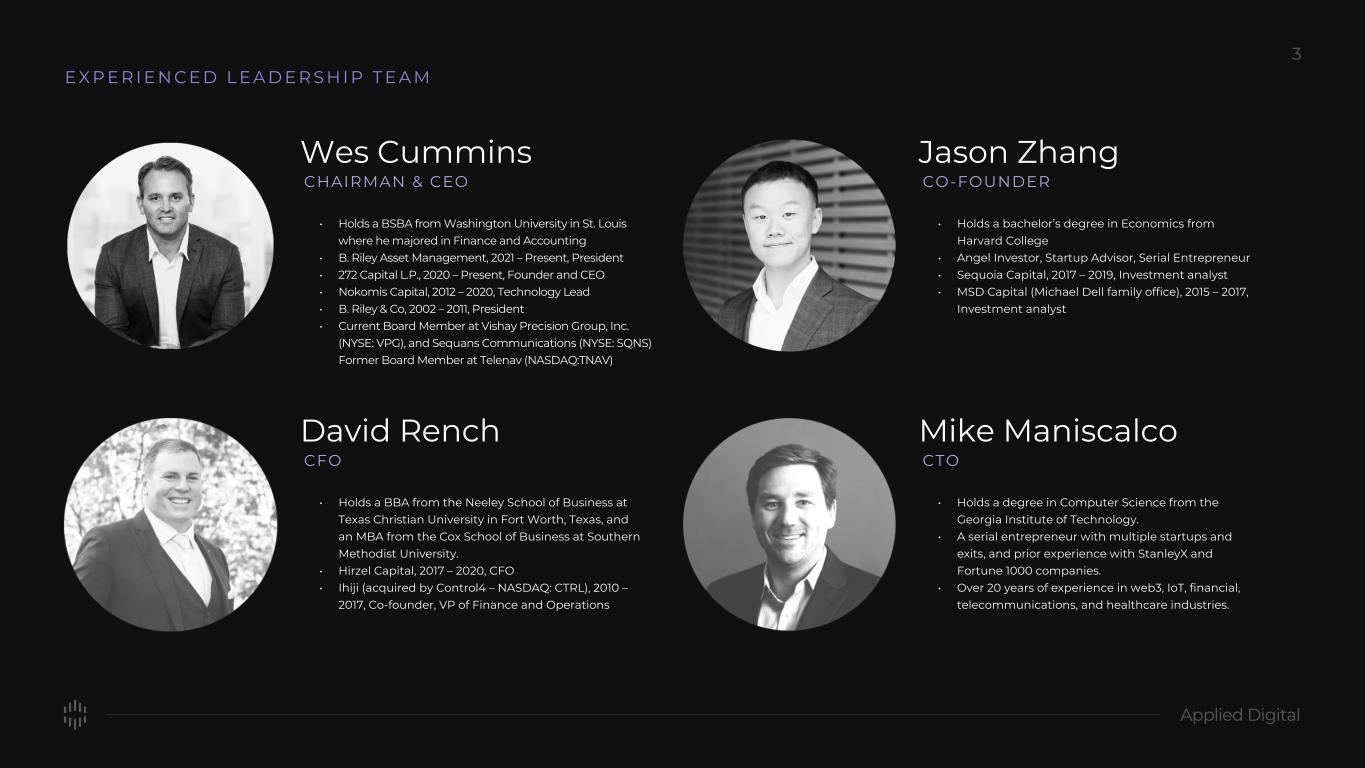

EXPERIENCED LEADERSHIP TEAM

Wes Cummins, CHAIRMAN & CEO
• Holds a BSBA from Washington University in St. Louis, where he majored in Finance and Accounting
• B. Riley Asset Management, 2021 – Present, President
• 272 Capital L.P., 2020 – Present, Founder and CEO
• Nokomis Capital, 2012 – 2020, Technology Lead
• B. Riley & Co, 2002 – 2011, President
• Current Board Member at Vishay Precision Group, Inc. (NYSE: VPG) and Sequans Communications (NYSE: SQNS)
• Former Board Member at Telenav (NASDAQ: TNAV)

Jason Zhang, CO-FOUNDER
• Holds a bachelor’s degree in Economics from Harvard College
• Angel Investor, Startup Advisor, Serial Entrepreneur
• Sequoia Capital, 2017 – 2019, Investment Analyst
• MSD Capital (Michael Dell family office), 2015 – 2017, Investment Analyst

David Rench, CFO
• Holds a BBA from the Neeley School of Business at Texas Christian University in Fort Worth, Texas, and an MBA from the Cox School of Business at Southern Methodist University
• Hirzel Capital, 2017 – 2020, CFO
• Ihiji (acquired by Control4 – NASDAQ: CTRL), 2010 – 2017, Co-founder, VP of Finance and Operations

Mike Maniscalco, CTO
• Holds a degree in Computer Science from the Georgia Institute of Technology
• A serial entrepreneur with multiple startups and exits, and prior experience with StanleyX and Fortune 1000 companies
• Over 20 years of experience in web3, IoT, financial, telecommunications, and healthcare industries

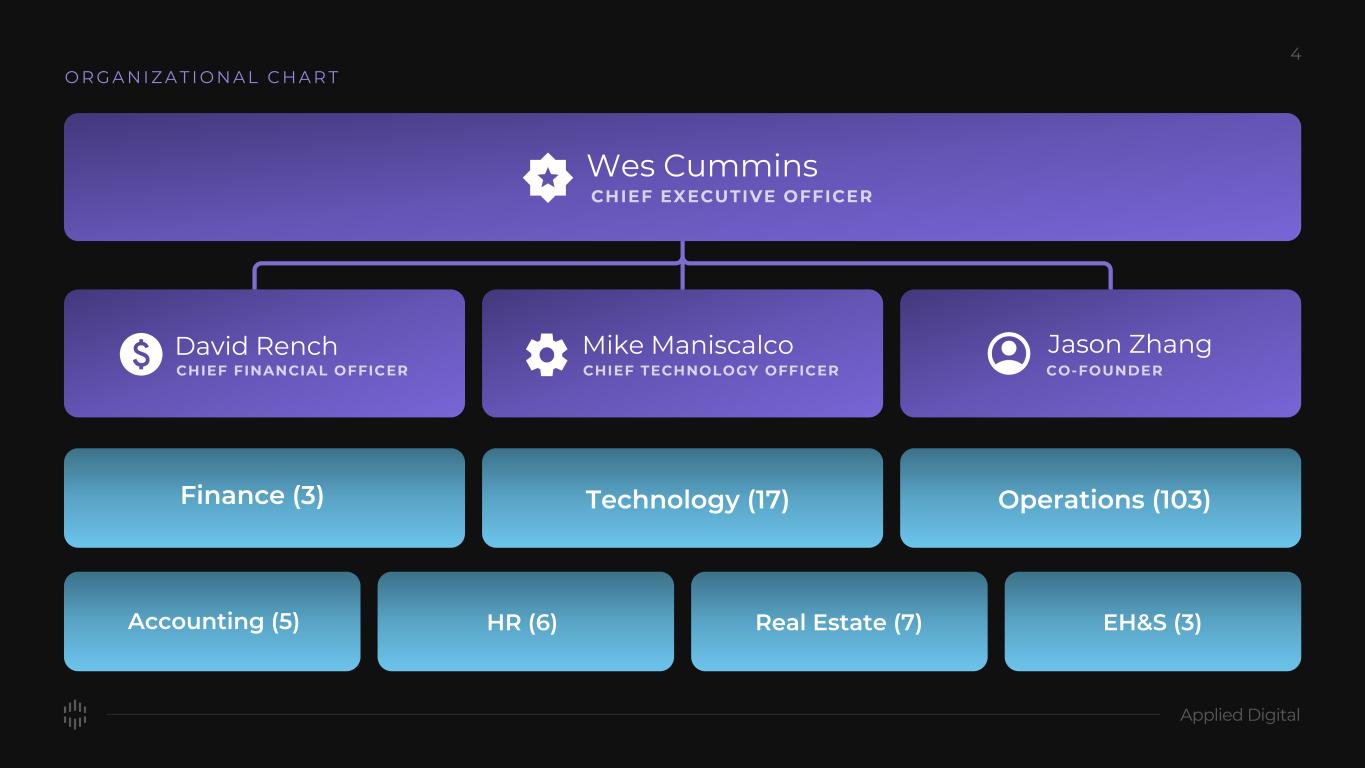

ORGANIZATIONAL CHART

Wes Cummins, David Rench, Mike Maniscalco, Jason Zhang

• Operations (103)
• Technology (17)
• Real Estate (7)
• HR (6)
• Accounting (5)
• Finance (3)
• EH&S (3)

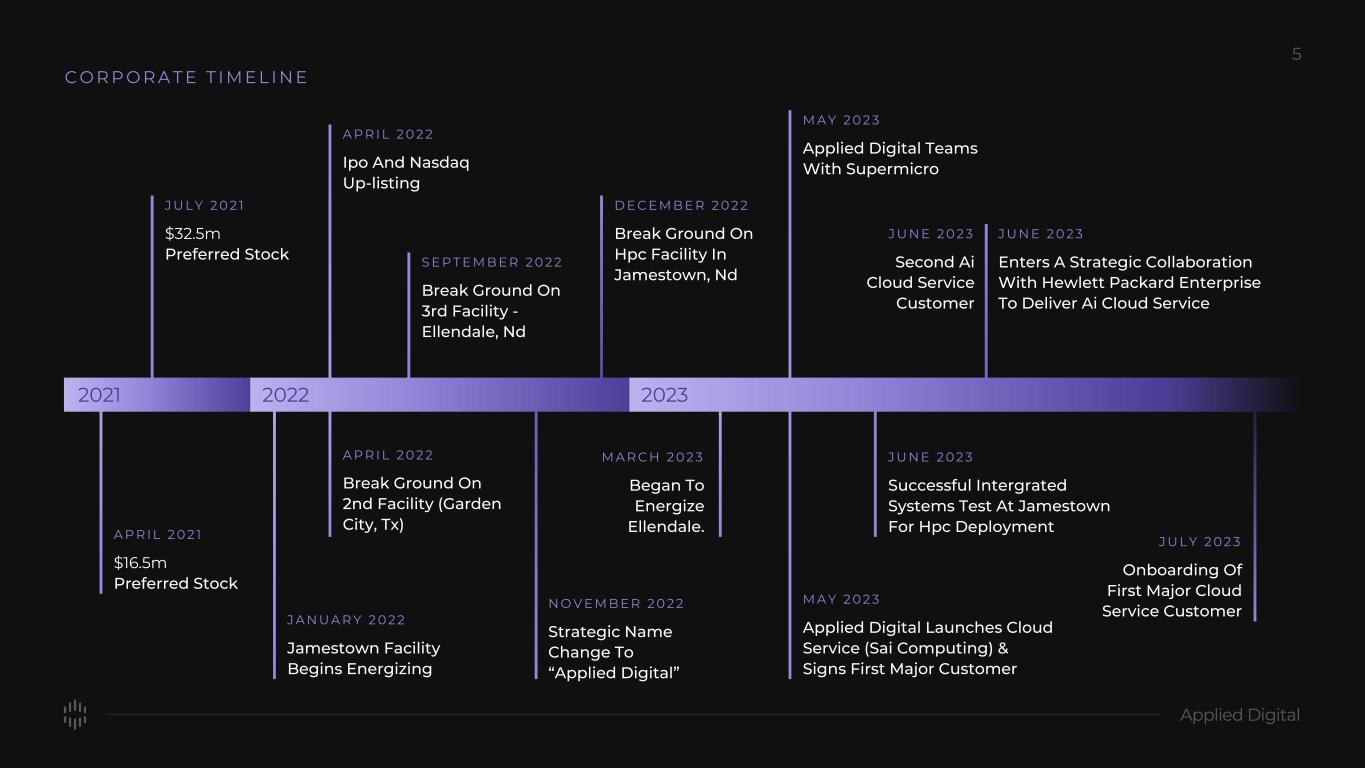

CORPORATE TIMELINE

2021
• April 2021: $16.5M Preferred Stock
• July 2021: $32.5M Preferred Stock

2022
• January 2022: Jamestown facility begins energizing
• April 2022: Break ground on 2nd facility (Garden City, TX)
• April 2022: IPO and Nasdaq up-listing
• September 2022: Break ground on 3rd facility in Ellendale, ND
• November 2022: Strategic name change to “Applied Digital”
• December 2022: Break ground on HPC facility in Jamestown, ND

2023
• March 2023: Began to energize Ellendale
• May 2023: Applied Digital launches cloud service (Sai Computing) and signs first major customer
• May 2023: Applied Digital teams with Supermicro
• June 2023: Enters a strategic collaboration with Hewlett Packard Enterprise to deliver AI cloud service
• June 2023: Second AI cloud service customer
• June 2023: Successful integrated systems test at Jamestown for HPC deployment
• July 2023: Onboarding of first major cloud service customer

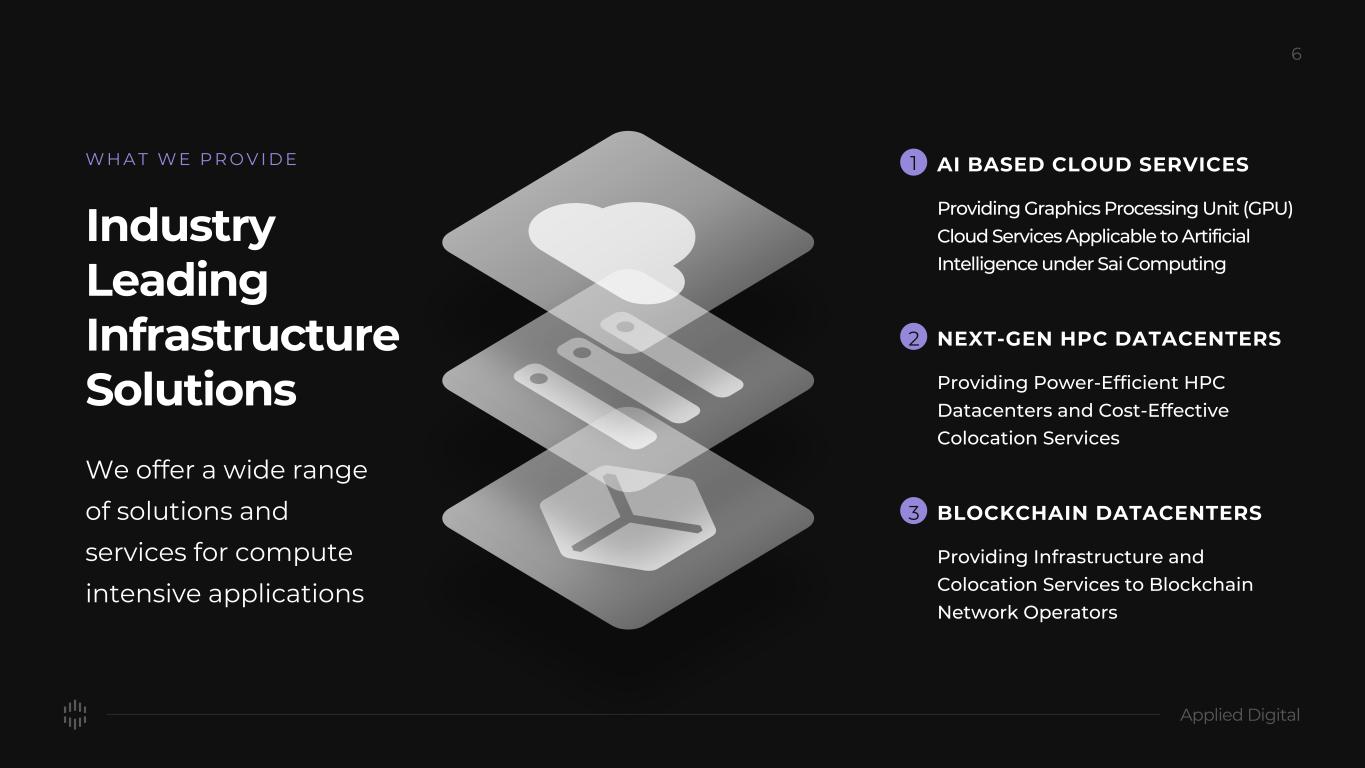

WHAT WE PROVIDE

Industry Leading Infrastructure Solutions

We offer a wide range of solutions and services for compute-intensive applications.

1. AI BASED CLOUD SERVICES: Providing Graphics Processing Unit (GPU) Cloud Services Applicable to Artificial Intelligence under Sai Computing
2. NEXT-GEN HPC DATACENTERS: Providing Power-Efficient HPC Datacenters and Cost-Effective Colocation Services
3. BLOCKCHAIN DATACENTERS: Providing Infrastructure and Colocation Services to Blockchain Network Operators

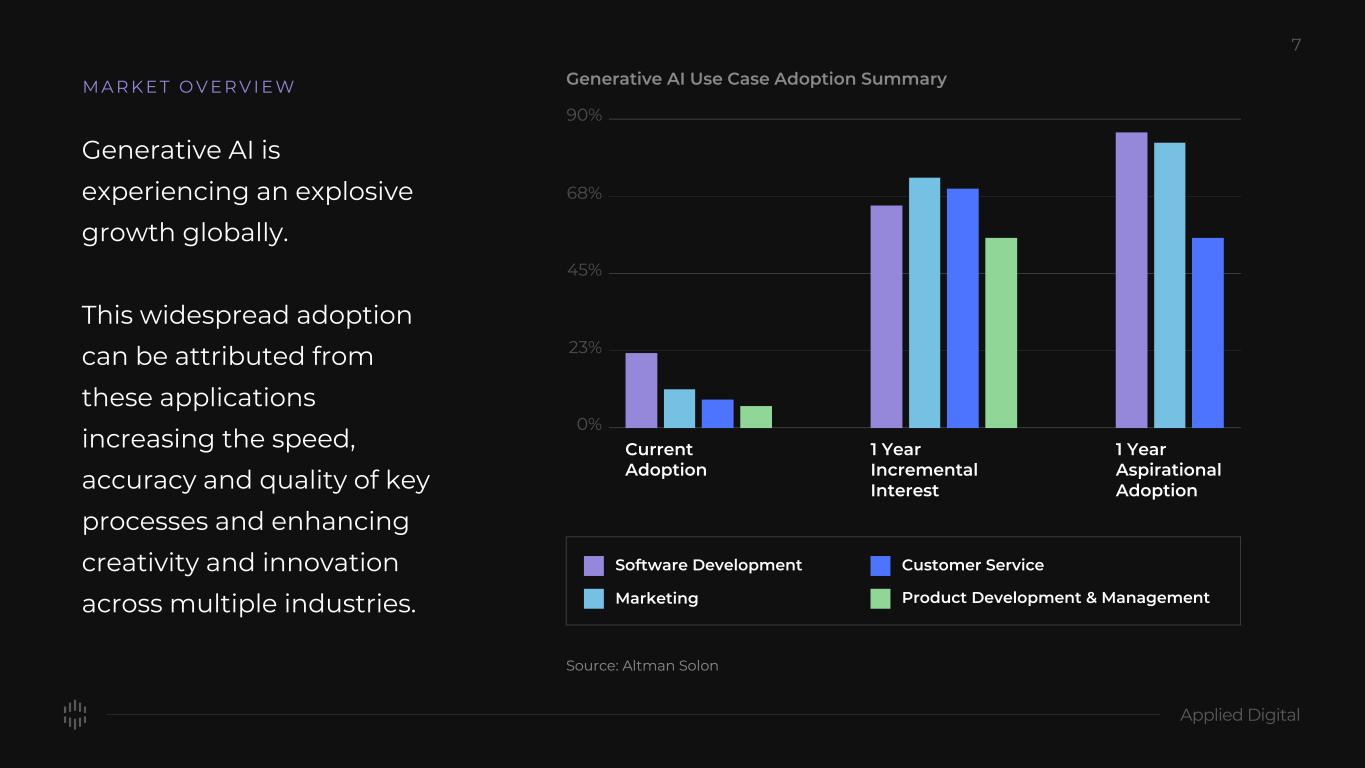

MARKET OVERVIEW

Generative AI is experiencing explosive growth globally. This widespread adoption can be attributed to these applications increasing the speed, accuracy, and quality of key processes and enhancing creativity and innovation across multiple industries.

[Chart: Current Adoption, 1 Year Aspirational Adoption, and 1 Year Incremental Interest for Software Development, Customer Service, Marketing, and Product Development & Management]

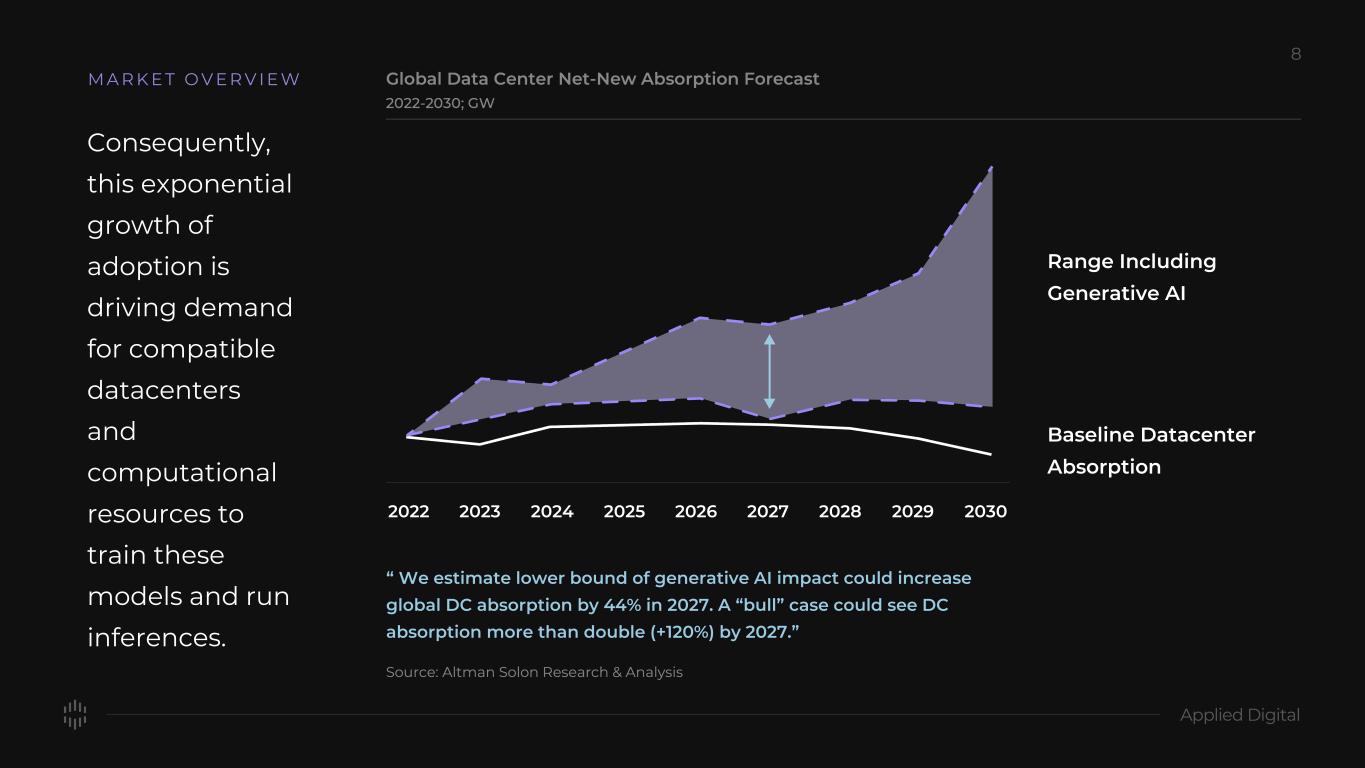

Consequently, this exponential growth in adoption is driving demand for compatible datacenters and computational resources to train these models and run inference.

[Chart: Datacenter Absorption, 2022 to 2030: Baseline vs. Range Including Generative AI]

“We estimate lower bound of generative AI impact could increase global DC absorption by 44% in 2027. A “bull” case could see DC absorption more than double (+120%) by 2027.”

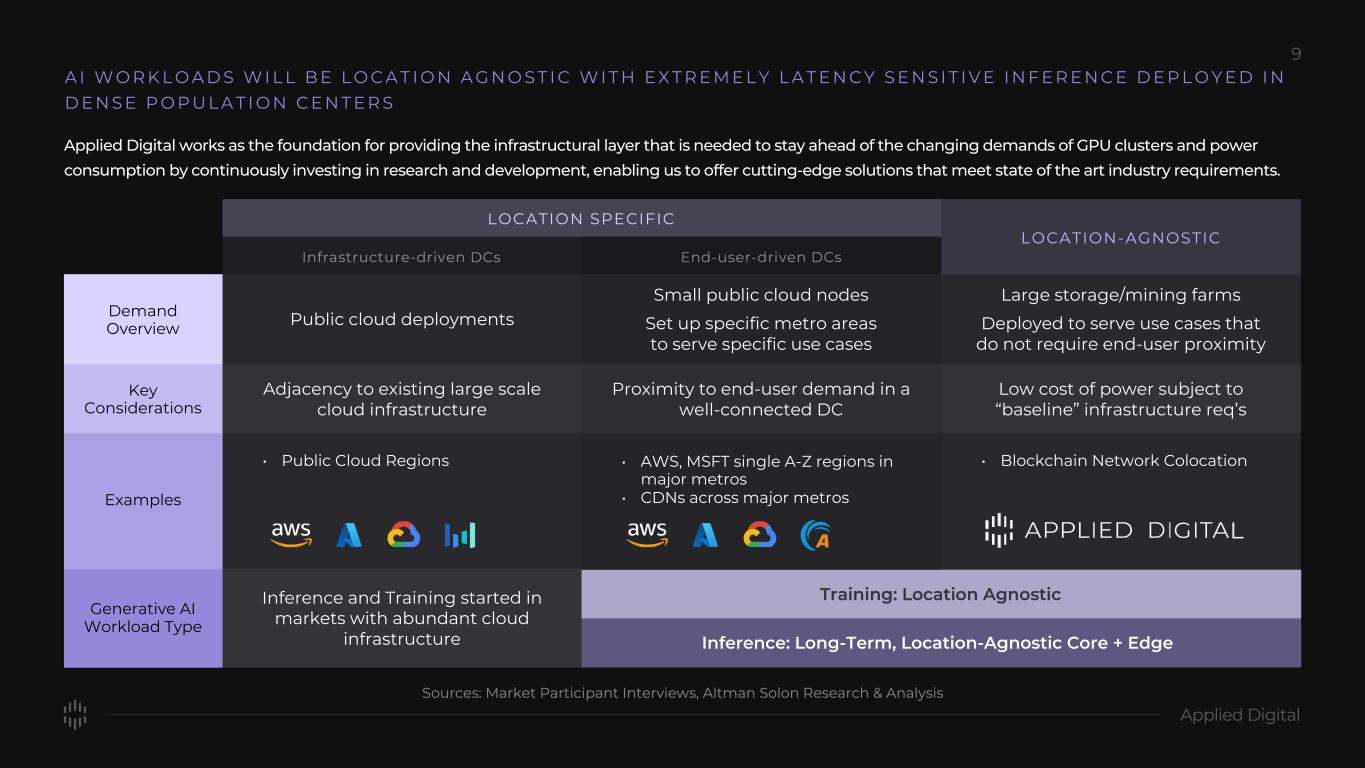

AI WORKLOADS WILL BE LOCATION-AGNOSTIC, WITH EXTREMELY LATENCY-SENSITIVE INFERENCE DEPLOYED IN DENSE POPULATION CENTERS

Applied Digital provides the infrastructure layer needed to stay ahead of the changing demands of GPU clusters and power consumption. We continuously invest in research and development so that we can offer cutting-edge solutions that meet state-of-the-art industry requirements.

Spectrum: LOCATION SPECIFIC to LOCATION-AGNOSTIC

Demand Overview
• Public cloud deployments
• Small public cloud nodes: set up in specific metro areas to serve specific use cases
• Large storage/mining farms: deployed to serve use cases that do not require end-user proximity

Key Considerations
• Adjacency to existing large-scale cloud infrastructure
• Proximity to end-user demand in a well-connected DC
• Low cost of power subject to “baseline” infrastructure requirements

Examples
• Public Cloud Regions
• AWS, MSFT single-AZ regions in major metros
• CDNs across major metros
• Blockchain Network Colocation

Generative AI Workload Type
• Inference and Training started in markets with abundant cloud infrastructure
• Inference: Long-Term, Location-Agnostic Core + Edge

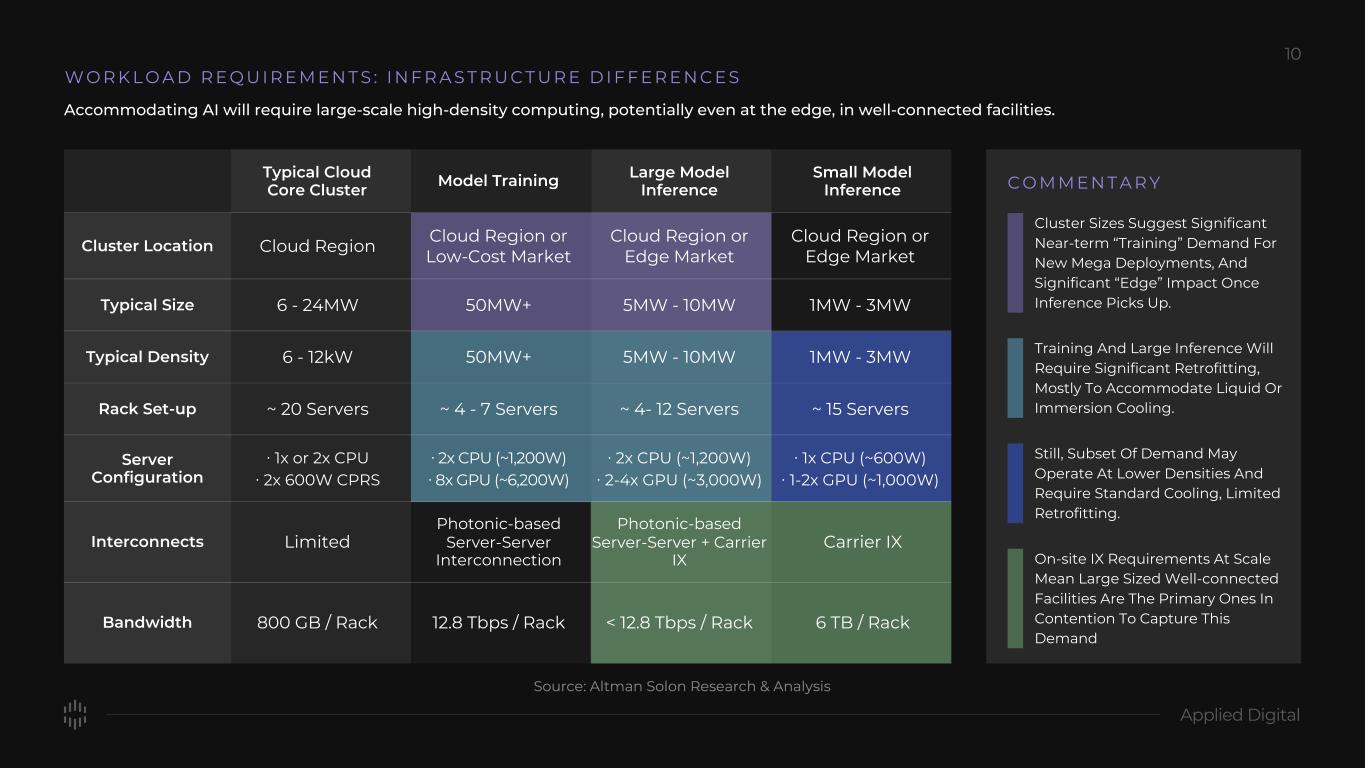

WORKLOAD REQUIREMENTS: INFRASTRUCTURE DIFFERENCES

Accommodating AI will require large-scale high-density computing, potentially even at the edge, in well-connected facilities.

| | Typical Cloud Core Cluster | Model Training | Large Model Inference | Small Model Inference |
|---|---|---|---|---|
| Cluster Location | Cloud Region | Cloud Region or Low-Cost Market | Cloud Region or Edge Market | Cloud Region or Edge Market |
| Typical Size | 6 - 24MW | 50MW+ | 5MW - 10MW | 1MW - 3MW |
| Typical Density | 6 - 12kW | 50MW+ | 5MW - 10MW | 1MW - 3MW |
| Rack Set-up | ~20 Servers | ~4 - 7 Servers | ~4 - 12 Servers | ~15 Servers |
| Server Configuration | 1x or 2x CPU; 2x 600W CPRS | 2x CPU (~1,200W); 8x GPU (~6,200W) | 2x CPU (~1,200W); 2-4x GPU (~3,000W) | 1x CPU (~600W); 1-2x GPU (~1,000W) |
| Interconnects | Limited | Photonic-based Server-Server Interconnection | Photonic-based Server-Server + Carrier IX | Carrier IX |
| Bandwidth | 800 GB / Rack | 12.8 Tbps / Rack | < 12.8 Tbps / Rack | 6 TB / Rack |

COMMENTARY
• Cluster sizes suggest significant near-term “training” demand for new mega deployments, and significant “edge” impact once inference picks up.
• Training and large inference will require significant retrofitting, mostly to accommodate liquid or immersion cooling.
• Still, a subset of demand may operate at lower densities and require standard cooling and limited retrofitting.
• On-site IX requirements at scale mean large, well-connected facilities are the primary ones in contention to capture this demand.
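As a rough cross-check of the server configurations and rack set-ups listed above, the sketch below sums the approximate CPU and GPU wattages per server and multiplies by the servers-per-rack range. It assumes each quoted wattage is the total for that component group and ignores memory, networking, cooling, and power-supply overhead, so real rack loads would be higher. The dictionary and function names are illustrative only.

```python
# Approximate component power (watts) and servers per rack, copied from the
# workload requirements slide. Upper GPU figures are used where a range is given.
WORKLOADS = {
    "Model Training": {"cpu_w": 1200, "gpu_w": 6200, "servers_per_rack": (4, 7)},
    "Large Model Inference": {"cpu_w": 1200, "gpu_w": 3000, "servers_per_rack": (4, 12)},
    "Small Model Inference": {"cpu_w": 600, "gpu_w": 1000, "servers_per_rack": (15, 15)},
}


def server_power_w(cfg):
    """CPU plus GPU power for one server, in watts (assumption: no other overhead)."""
    return cfg["cpu_w"] + cfg["gpu_w"]


def rack_power_kw(cfg):
    """Return (low, high) rack power in kW for the servers-per-rack range."""
    low, high = cfg["servers_per_rack"]
    per_server = server_power_w(cfg)
    return (per_server * low / 1000, per_server * high / 1000)


for name, cfg in WORKLOADS.items():
    low_kw, high_kw = rack_power_kw(cfg)
    print(f"{name}: {server_power_w(cfg)} W per server, "
          f"{low_kw:.1f} to {high_kw:.1f} kW per rack")
```

Under these assumptions a training rack with 4 to 7 servers draws roughly 30 to 52 kW from CPUs and GPUs alone, well above the 6 - 12kW listed for a typical cloud core cluster.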

AI Cloud Services

Sai Computing, a wholly-owned subsidiary of Applied Digital, offers cloud services that provide high-performance computing power for AI applications, including large language model training, inference, graphics rendering, and more.

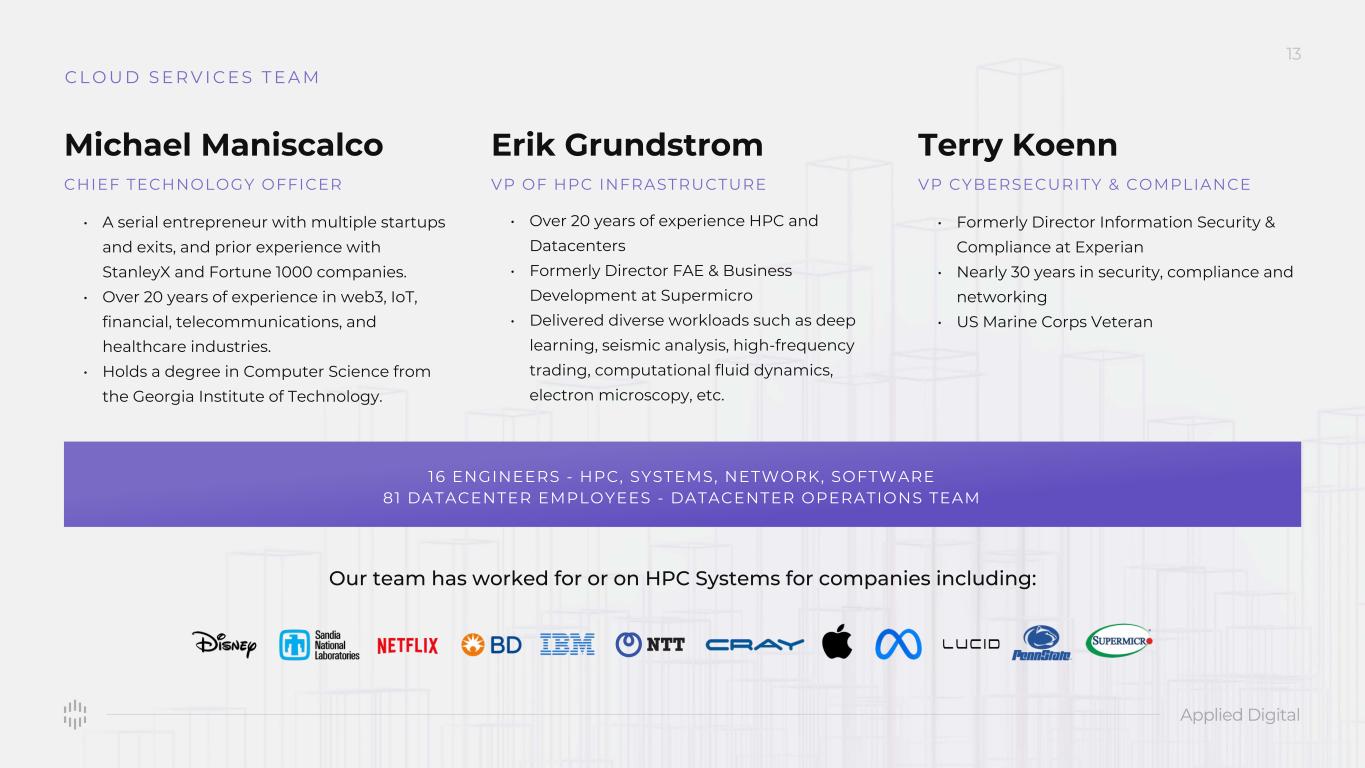

CLOUD SERVICES TEAM

Michael Maniscalco, CHIEF TECHNOLOGY OFFICER
• A serial entrepreneur with multiple startups and exits, and prior experience with StanleyX and Fortune 1000 companies
• Over 20 years of experience in web3, IoT, financial, telecommunications, and healthcare industries
• Holds a degree in Computer Science from the Georgia Institute of Technology

Erik Grundstrom, VP OF HPC INFRASTRUCTURE
• Over 20 years of experience in HPC and datacenters
• Formerly Director FAE & Business Development at Supermicro
• Delivered diverse workloads such as deep learning, seismic analysis, high-frequency trading, computational fluid dynamics, electron microscopy, etc.

Terry Koenn, VP CYBERSECURITY & COMPLIANCE
• Formerly Director Information Security & Compliance at Experian
• Nearly 30 years in security, compliance, and networking
• US Marine Corps Veteran

16 ENGINEERS: HPC, SYSTEMS, NETWORK, SOFTWARE
81 DATACENTER EMPLOYEES: DATACENTER OPERATIONS TEAM

Our team has worked for or on HPC Systems for companies including:

Bare Metal GPU Cloud Services

The Bare Metal service model sets a gold standard for deployments: purpose-built, dedicated physical infrastructure that serves as the equivalent of a client's on-site server room. This service offers a full stack of network, storage, and compute in one deployment, with a cloud-like capability to deliver hardware at the speed of software.

Bare Metal Advantages:
1. Dedicated Systems Resources
2. Reduction of the Noisy Neighbor effect in Datacenter deployments
3. Faster Deployments and Scalability
4. Ability to interchange between Training > Inference Models
5. Ability to switch hardware (more dynamic)
6. Custom Access: Direct IP, API

Performance and Reliability

APLD Infrastructure and Sai Services Designed for Performance, High Availability, Security and Reliability.

• Unparalleled Processing Power: Our GPU Cloud boasts state-of-the-art Graphics Processing Units (GPUs) that deliver unmatched computational power. With thousands of cores and high memory bandwidth, these GPUs can handle massive parallel processing tasks, enabling swift execution of data-intensive operations.
• Data Security and Privacy: We prioritize the security and privacy of your data. Our GPU Cloud employs industry-leading encryption standards and follows strict compliance protocols to safeguard sensitive information from unauthorized access or breaches.
• Reliability and Uptime: We understand that mission-critical applications cannot afford downtime. That is why our GPU Cloud is built on a robust and fault-tolerant architecture. Redundancy measures, load balancing, and failover mechanisms ensure high availability, minimizing the risk of service disruptions.
• Optimized for AI and ML Workloads: AI and machine learning tasks often require iterative training processes that demand significant computing resources. Our GPU Cloud is tuned to handle such workloads efficiently, reducing training times and improving the accuracy of models.
• Expert Support and Monitoring: Our team of experienced professionals is dedicated to providing high-standard support and monitoring services. From initial setup to ongoing maintenance, we are committed to assisting you throughout your journey on our GPU Cloud.

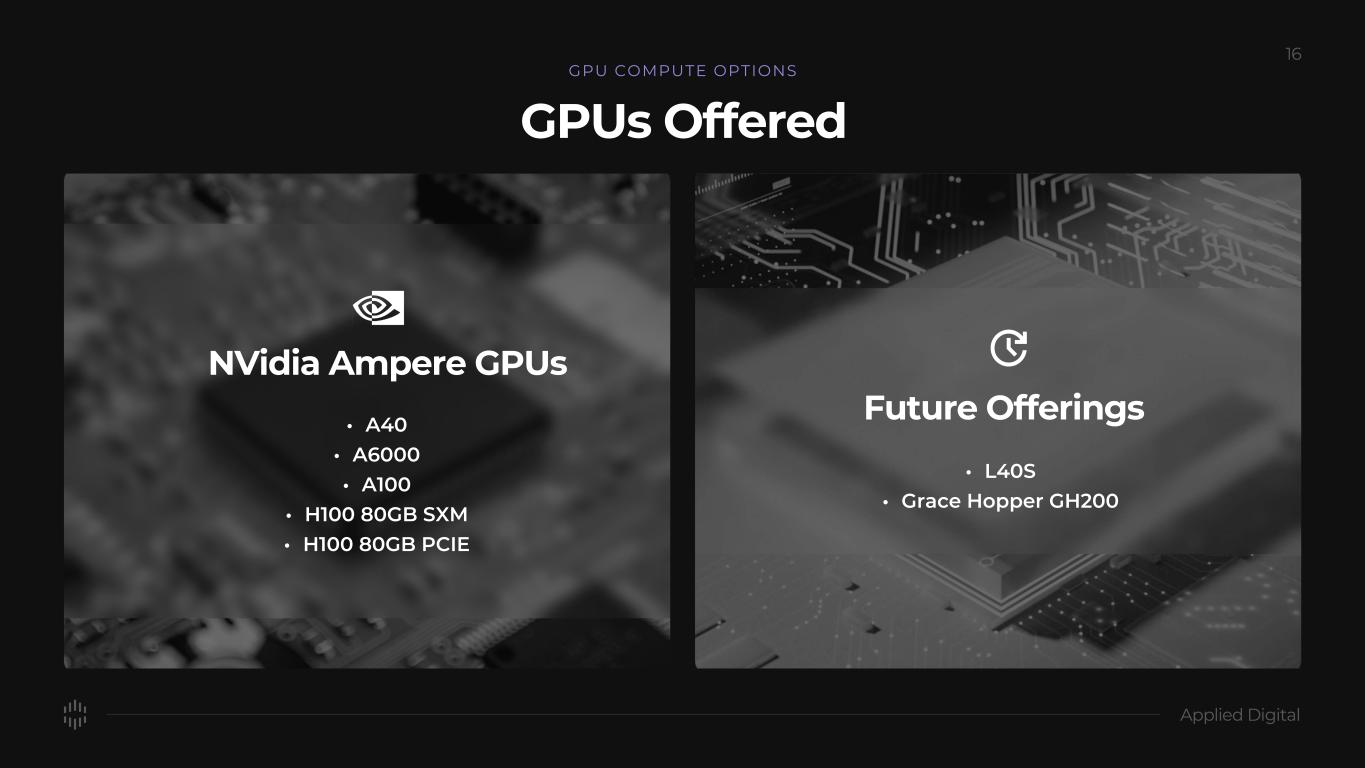

GPU COMPUTE OPTIONS

GPUs Offered

NVidia Ampere GPUs
• A40
• A6000
• A100

NVidia Hopper GPUs
• H100 80GB SXM
• H100 80GB PCIE

Future Offerings
• L40S
• Grace Hopper GH200

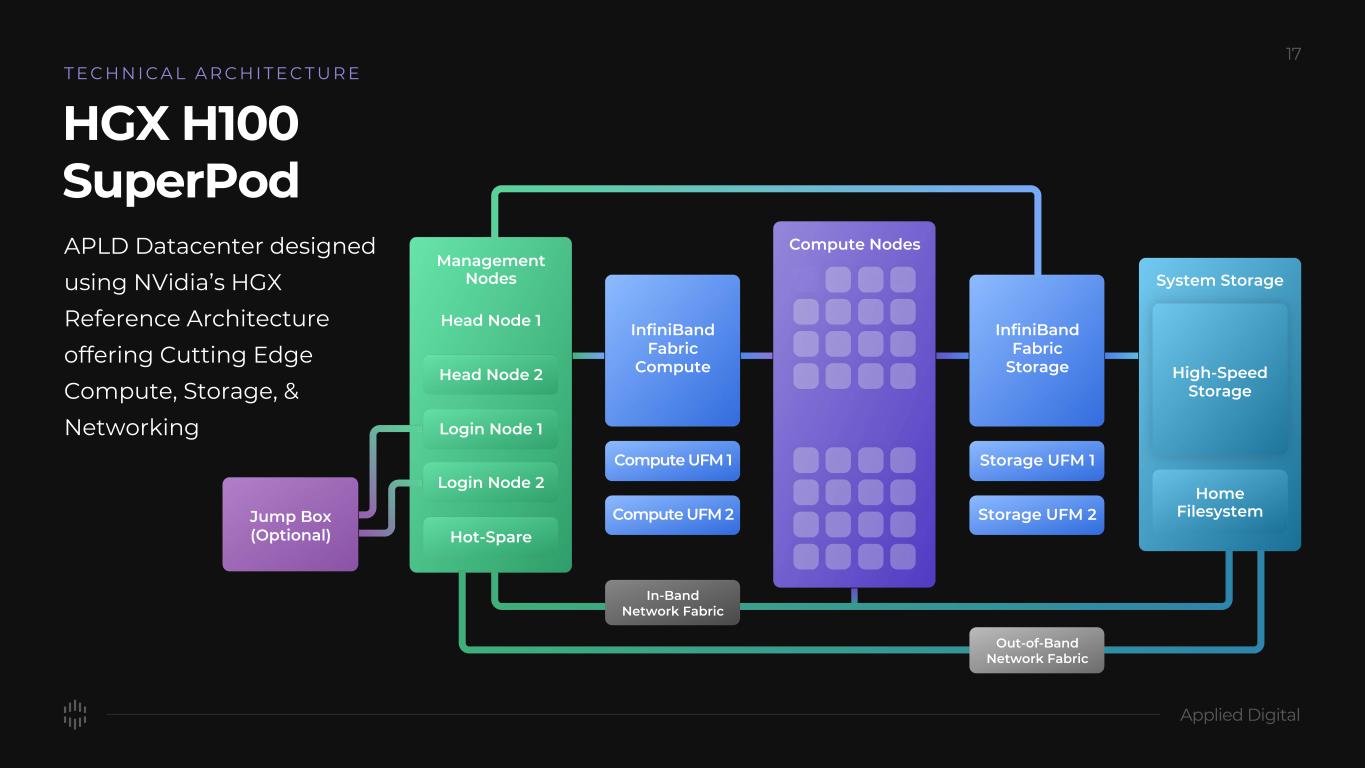

TECHNICAL ARCHITECTURE

APLD datacenter designed using NVidia’s HGX Reference Architecture, offering cutting-edge compute, storage, and networking.

[Diagram: HGX H100 SuperPod]
• InfiniBand Fabric Storage: Storage UFM 1, Storage UFM 2
• InfiniBand Fabric Compute: Compute UFM 1, Compute UFM 2
• Jump Box (Optional)
• High-Speed Storage System
• Storage Home Filesystem
• Head Node 1, Login Node 1
• Head Node 2, Login Node 2
• Hot-Spare Management Nodes
• In-Band Network Fabric
• Out-of-Band Network Fabric
• Compute Nodes

APLD's OEM Equipment Partners

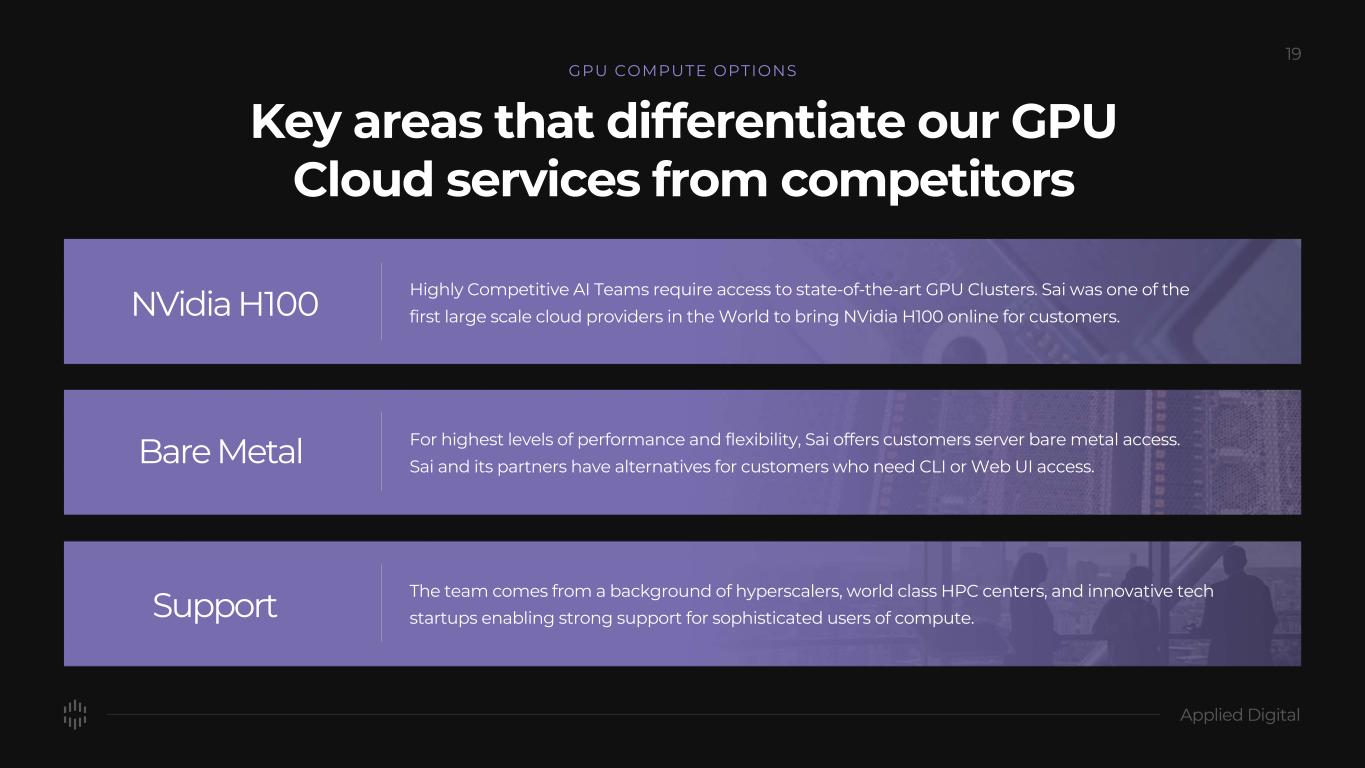

Key areas that differentiate our GPU Cloud services from competitors

• Support: The team comes from a background of hyperscalers, world-class HPC centers, and innovative tech startups, enabling strong support for sophisticated users of compute.
• Bare Metal: For the highest levels of performance and flexibility, Sai offers customers bare metal server access. Sai and its partners have alternatives for customers who need CLI or Web UI access.
• NVidia H100: Highly competitive AI teams require access to state-of-the-art GPU clusters. Sai was one of the first large-scale cloud providers in the world to bring NVidia H100 online for customers.

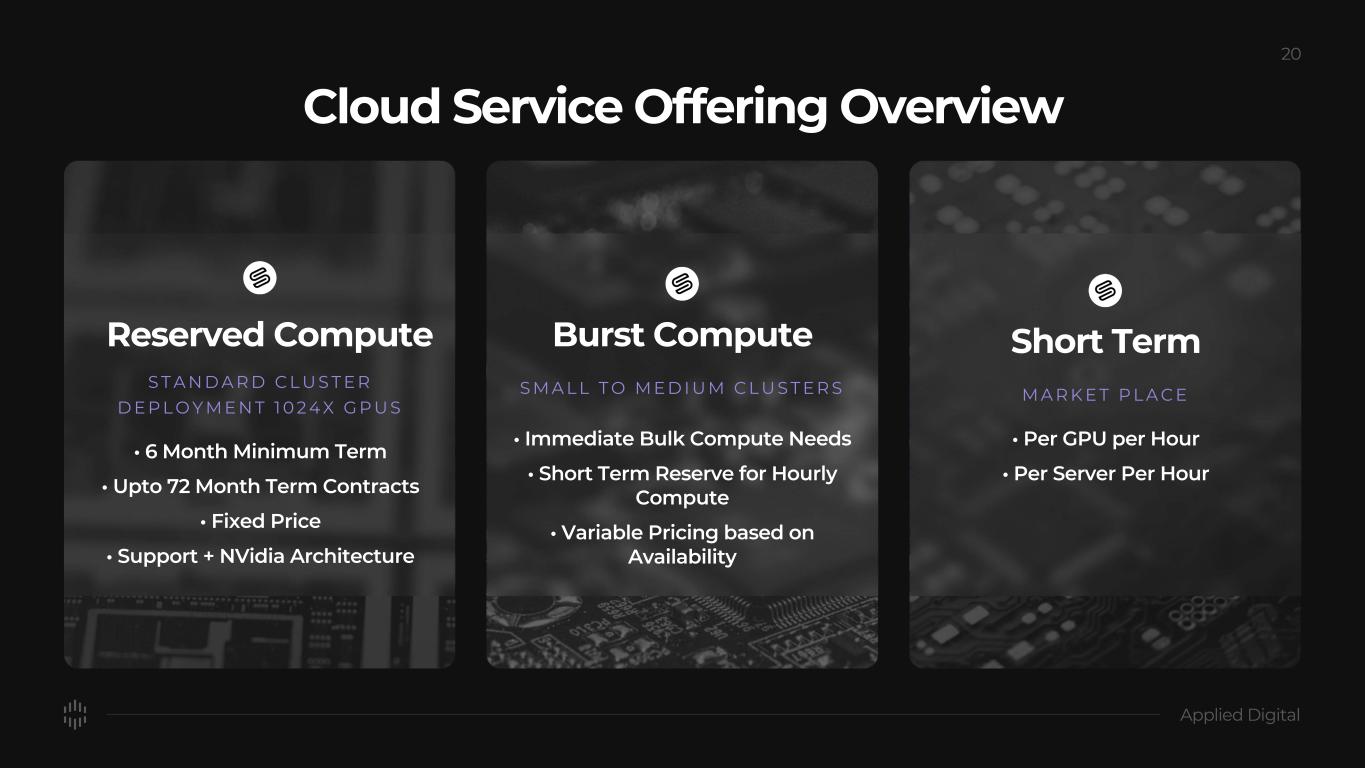

Cloud Service Offering Overview

Reserved Compute
STANDARD CLUSTER DEPLOYMENT: 1024x GPUS
• 6 Month Minimum Term
• Up to 72 Month Term Contracts
• Fixed Price
• Support + NVidia Architecture

Burst Compute
SMALL TO MEDIUM CLUSTERS
• Immediate Bulk Compute Needs
• Short Term Reserve for Hourly Compute
• Variable Pricing based on Availability

Short Term
MARKETPLACE
• Per GPU per Hour
• Per Server per Hour

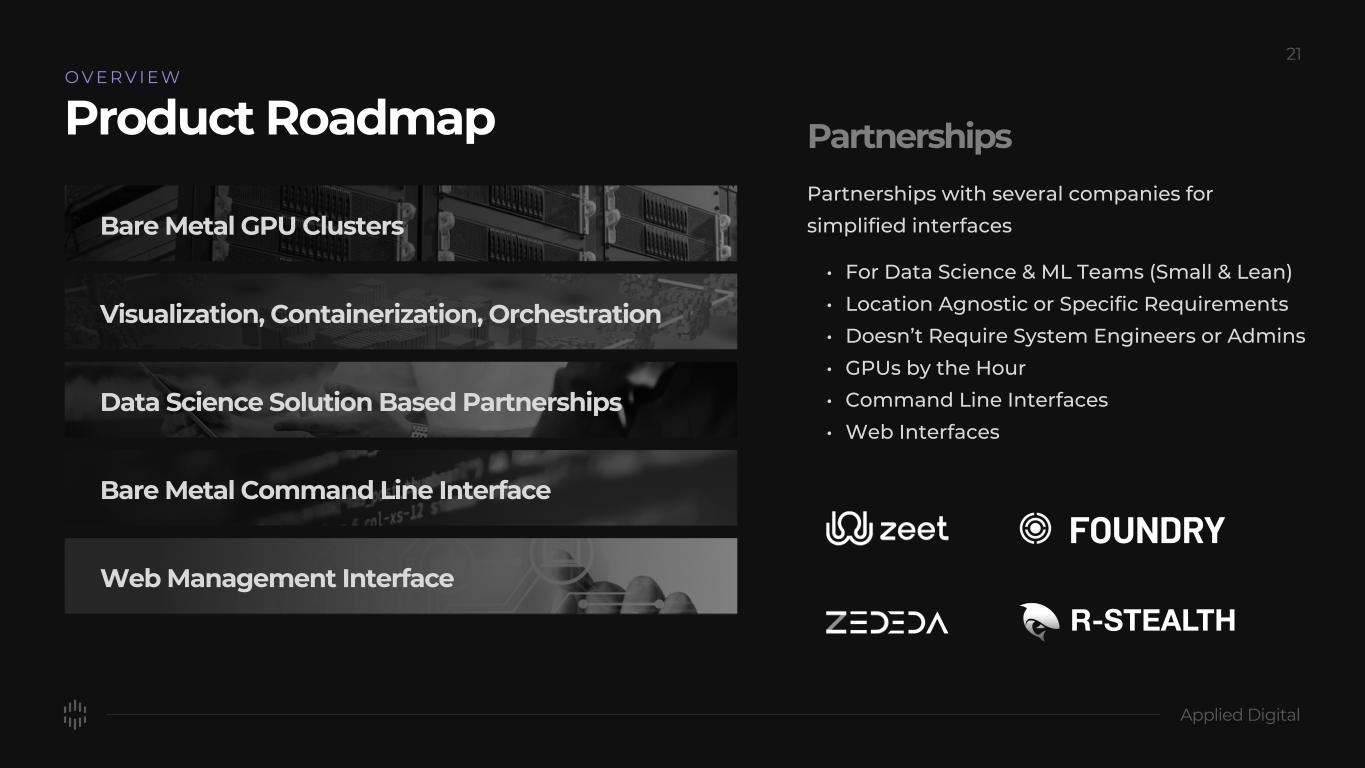

OVERVIEW

Product Roadmap
• Bare Metal GPU Clusters
• Visualization, Containerization, Orchestration
• Data Science Solution Based Partnerships

Bare Metal
• Command Line Interface
• Web Management Interface

Partnerships
Partnerships with several companies for simplified interfaces
• For Data Science & ML Teams (Small & Lean)
• Location Agnostic or Specific Requirements
• Doesn’t Require System Engineers or Admins
• GPUs by the Hour
• Command Line Interfaces
• Web Interfaces

R-STEALTH

Next-Gen HPC Datacenter Colocation Services

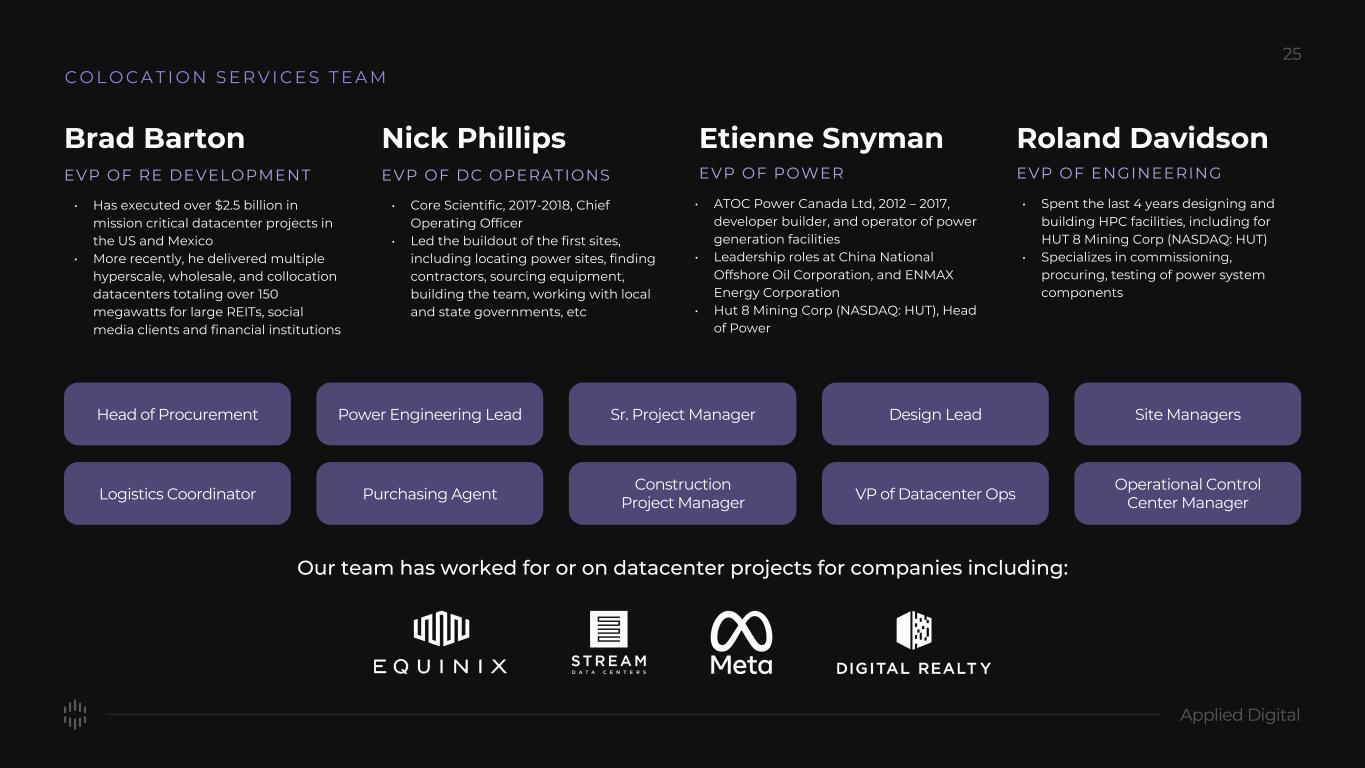

COLOCATION SERVICES TEAM

Brad Barton, EVP OF RE DEVELOPMENT
• Has executed over $2.5 billion in mission-critical datacenter projects in the US and Mexico
• More recently, he delivered multiple hyperscale, wholesale, and colocation datacenters totaling over 150 megawatts for large REITs, social media clients, and financial institutions

Nick Phillips, EVP OF DC OPERATIONS
• Core Scientific, 2017 – 2018, Chief Operating Officer
• Led the buildout of the first sites, including locating power sites, finding contractors, sourcing equipment, building the team, working with local and state governments, etc.

Etienne Snyman, EVP OF POWER
• ATOC Power Canada Ltd, 2012 – 2017, developer, builder, and operator of power generation facilities
• Leadership roles at China National Offshore Oil Corporation and ENMAX Energy Corporation
• Hut 8 Mining Corp (NASDAQ: HUT), Head of Power

Roland Davidson, EVP OF ENGINEERING
• Spent the last 4 years designing and building HPC facilities, including for Hut 8 Mining Corp (NASDAQ: HUT)
• Specializes in commissioning, procuring, and testing of power system components

Team roles:
• Head of Procurement
• Power Engineering Lead
• Sr. Project Manager
• Design Lead
• Site Managers
• Logistics Coordinator
• Purchasing Agent
• Construction Project Manager
• VP of Datacenter Ops
• Operational Control Center Manager

Our team has worked for or on datacenter projects for companies including:

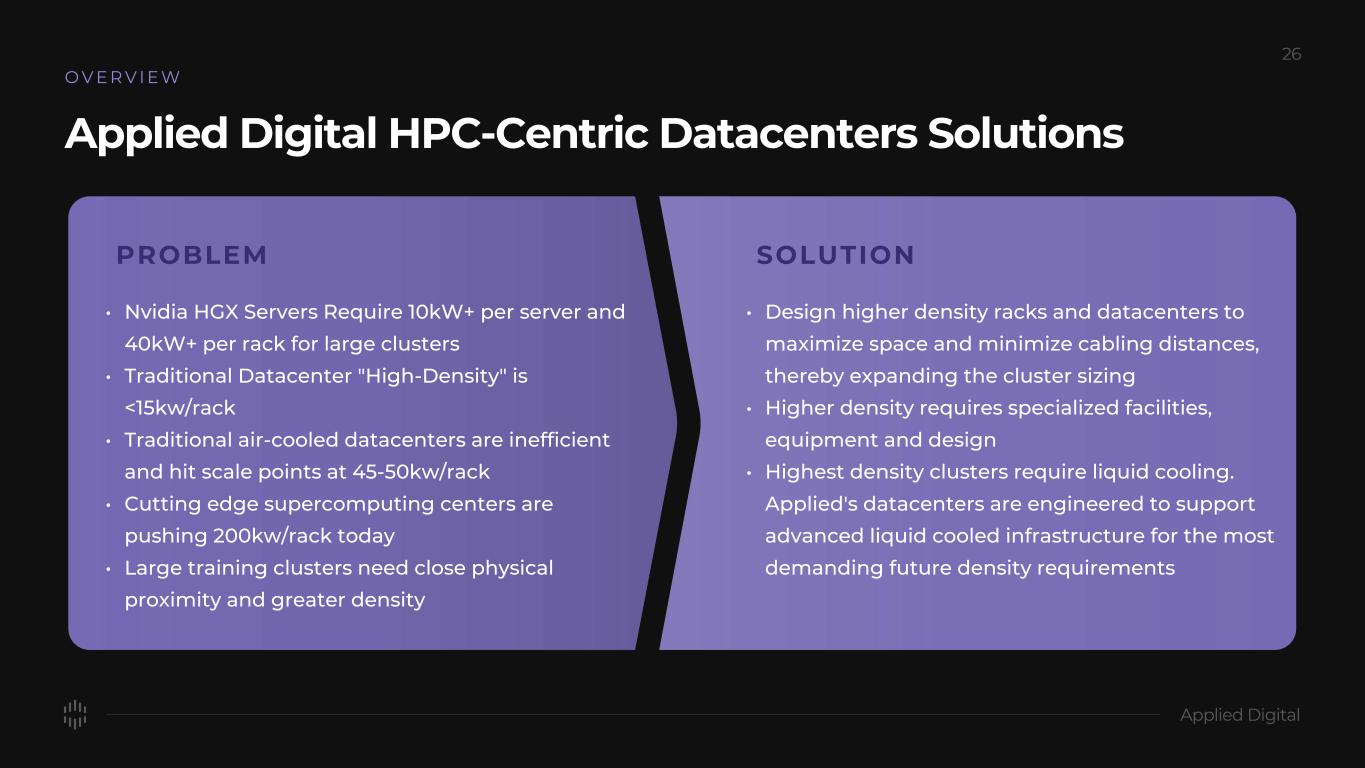

OVERVIEW

Applied Digital HPC-Centric Datacenters Solutions

PROBLEM
• Nvidia HGX servers require 10 kW+ per server and 40 kW+ per rack for large clusters
• Traditional datacenter "high-density" is <15 kW/rack
• Traditional air-cooled datacenters are inefficient and hit scale points at 45-50 kW/rack
• Cutting-edge supercomputing centers are pushing 200 kW/rack today
• Large training clusters need close physical proximity and greater density

SOLUTION
• Design higher-density racks and datacenters to maximize space and minimize cabling distances, thereby expanding the cluster sizing
• Higher density requires specialized facilities, equipment, and design
• Highest-density clusters require liquid cooling. Applied's datacenters are engineered to support advanced liquid-cooled infrastructure for the most demanding future density requirements
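To make the density problem above concrete, the following sketch estimates how many 10 kW servers fit in a rack at each of the quoted power budgets, and how many racks a cluster would then occupy. The 8 GPUs per server and the 1,024-GPU cluster size are assumptions chosen for illustration, the 10 kW figure is treated as exact although the slide gives it as a minimum, and all names are hypothetical.

```python
import math

# From the slide: an Nvidia HGX server needs 10 kW or more (treated as exactly 10 kW).
SERVER_KW = 10
# Assumptions for illustration only: 8 GPUs per server, 1,024-GPU cluster.
GPUS_PER_SERVER = 8
CLUSTER_GPUS = 1024

# Rack power budgets (kW) quoted on the slide.
RACK_BUDGETS_KW = {
    "traditional high-density (<15 kW)": 15,
    "HGX large-cluster rack (40 kW+)": 40,
    "air-cooled scale point (45-50 kW)": 50,
    "cutting-edge supercomputing (200 kW)": 200,
}


def servers_per_rack(rack_kw: int, server_kw: int = SERVER_KW) -> int:
    """Whole servers that fit within a rack's power budget."""
    return rack_kw // server_kw


def racks_needed(gpus: int, rack_kw: int) -> int:
    """Racks required to host `gpus` GPUs at the given rack power budget."""
    servers = math.ceil(gpus / GPUS_PER_SERVER)
    return math.ceil(servers / servers_per_rack(rack_kw))


for label, kw in RACK_BUDGETS_KW.items():
    print(f"{label}: {servers_per_rack(kw)} servers/rack, "
          f"{racks_needed(CLUSTER_GPUS, kw)} racks for {CLUSTER_GPUS} GPUs")
```

Under these assumptions the same cluster spans 128 racks at a traditional 15 kW budget but only 7 racks at 200 kW, which is the kind of footprint reduction that shortens cabling distances.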

STATE-OF-THE-ART INFRASTRUCTURE

Applied Digital Datacenters focus on massive compute loads, high-density deployments, and efficiency.

APLD’S CAMPUS INCLUDES:
• Dedicated Substation
• Custom Office Space
• Dedicated 24/7 Security Team
• Customizable Access Controls
• Cutting-Edge Video Monitoring leveraging AI and Edge Analytics
• Loading Dock with Burn-In
• Customer Storage Area
• Centralized Operations Command Center

DATA HALL FLOORS DESIGNED FOR FLEXIBILITY
• Tailored to customer requirements for InfiniBand-friendly deployments
• Rack densities from 45 kW to 120 kW can be deployed in a contiguous space
• Cost-effective electrical and mechanical fit-out models
• Data halls can be securely subdivided
• Industry-leading Power Usage Effectiveness (PUE)

(AI-generated image)

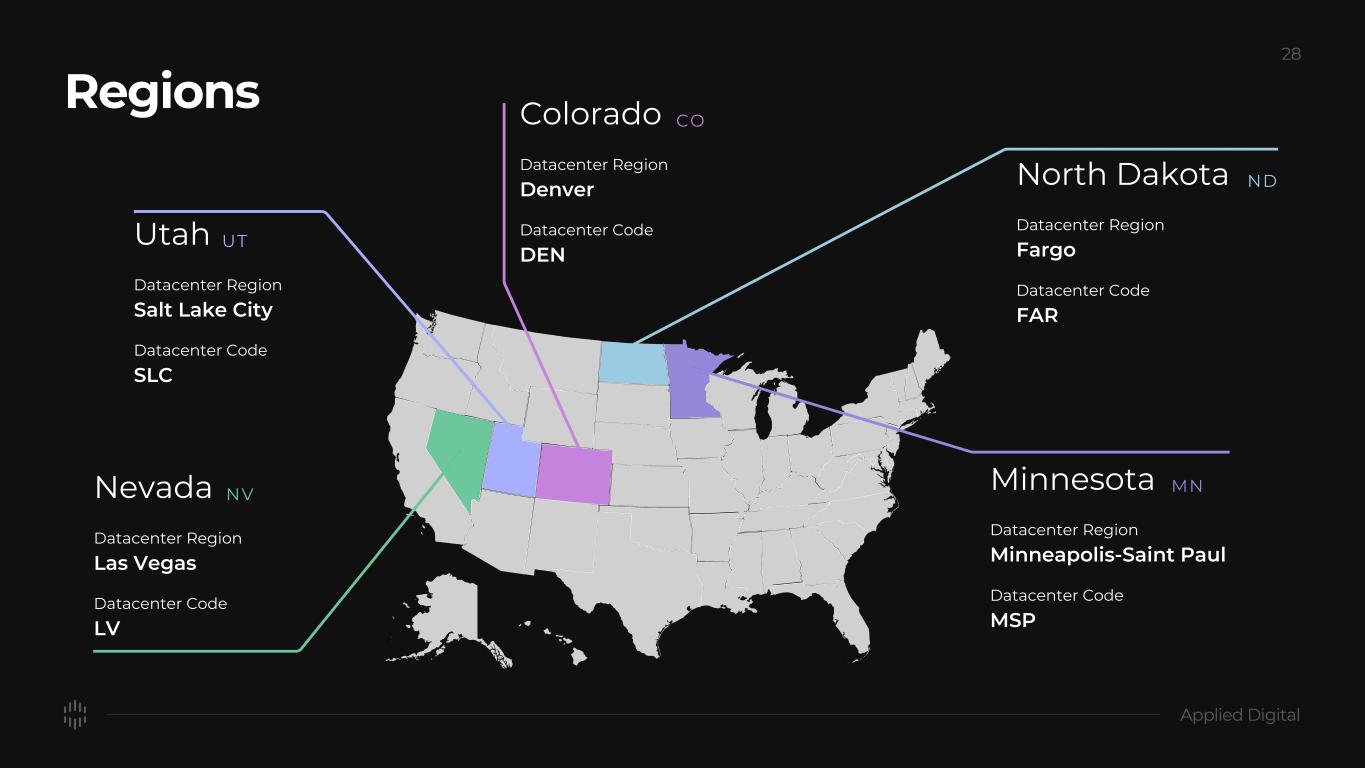

Regions

| State | Abbreviation | Datacenter Region | Datacenter Code |
|---|---|---|---|
| Minnesota | MN | Minneapolis-Saint Paul | MSP |
| North Dakota | ND | Fargo | FAR |
| Colorado | CO | Denver | DEN |
| Utah | UT | Salt Lake City | SLC |
| Nevada | NV | Las Vegas | LV |
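For teams that want to reference these regions programmatically, the listing above can be captured as a small lookup table. This is only a sketch: the `Region` type, `REGIONS` list, and `find_region` helper are hypothetical names, not part of any published Applied Digital or Sai Computing API.

```python
from typing import NamedTuple, Optional


class Region(NamedTuple):
    state: str
    state_abbr: str
    metro: str
    code: str


# Values copied from the Regions listing; the structure itself is illustrative.
REGIONS = [
    Region("Minnesota", "MN", "Minneapolis-Saint Paul", "MSP"),
    Region("North Dakota", "ND", "Fargo", "FAR"),
    Region("Colorado", "CO", "Denver", "DEN"),
    Region("Utah", "UT", "Salt Lake City", "SLC"),
    Region("Nevada", "NV", "Las Vegas", "LV"),
]

# Index by datacenter code for constant-time lookup.
BY_CODE = {region.code: region for region in REGIONS}


def find_region(code: str) -> Optional[Region]:
    """Look up a region by datacenter code, ignoring case and surrounding spaces."""
    return BY_CODE.get(code.strip().upper())


print(find_region("far"))
```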

info@saicomputing.com info@applieddigital.com